By 2025, AI sentiment analysis isn’t just about reading tweets or product reviews anymore. It’s learning to understand sarcasm in a customer’s complaint, detecting frustration in a voice call, and even reading micro-expressions in video support chats, all while running on blockchain-secured data pipelines. This isn’t science fiction. It’s already reshaping how businesses interact with customers, especially in decentralized ecosystems where trust and transparency matter more than ever.

How AI Sentiment Analysis Works Today

Modern AI sentiment analysis doesn’t just count positive or negative words. It uses large language models like GPT-4, fine-tuned with emotional context, to detect subtle cues: hesitation in phrasing, urgency in tone, or cultural idioms that change meaning across regions. A phrase like "This is fine" might mean calm acceptance in one context, or quiet rage in another. These systems now combine text, voice pitch, facial movements, and even typing speed to build a full emotional profile. For example, a customer typing "I’m okay" after a long support wait, with slow keystrokes and a flat voice tone, triggers a different response than someone typing "I’m okay!!" with rapid bursts and an upbeat voice. The AI doesn’t just label it as "neutral"; it flags it as "high risk of churn" and routes the case to a human supervisor. Companies like Crescendo.ai now analyze 100% of customer interactions, not just the 5% who fill out surveys. That’s a game-changer.

Why Blockchain Matters for Sentiment Data

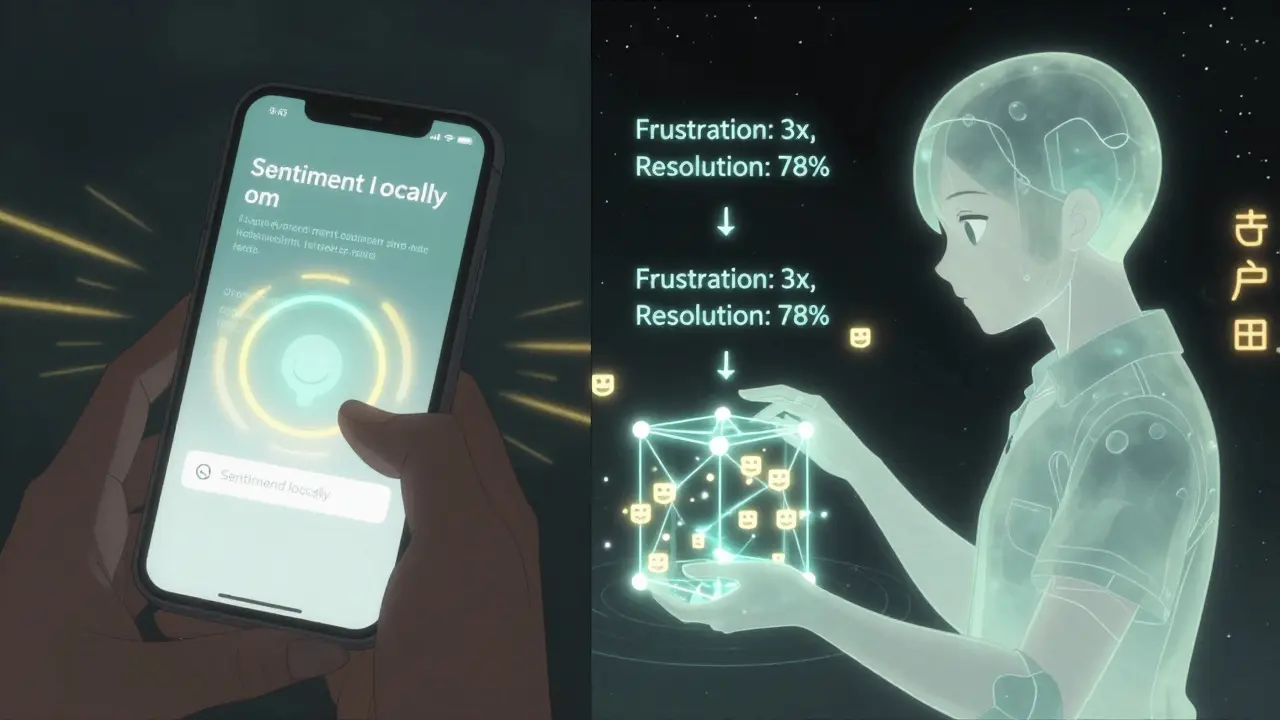

Here’s where blockchain steps in. Most sentiment analysis tools today rely on centralized servers that collect, store, and process user data. That creates serious privacy risks. What if a company sells your emotional data? What if a breach exposes your angry customer service logs? Blockchain changes that. Decentralized sentiment platforms now let users control their own data. Instead of sending your chat logs to a corporate server, your phone or smart device processes sentiment locally. Only an encrypted, anonymized summary, like "User expressed frustration 3x this week, resolution rate: 78%", gets recorded on a blockchain. This means companies still get the insights they need to improve service, but users retain ownership. No middlemen. No data sales. No hidden tracking.

Several blockchain-based projects, like SingularityNET and Ocean Protocol, are already testing this model. They allow AI models to be trained on encrypted sentiment data without ever seeing the raw input. Think of it like a locked box: the AI can shake the box and tell you what’s inside, but never opens it.

Real-World Use Cases in 2025

In crypto communities, sentiment analysis is now critical. Token prices don’t just move on news; they move on emotion. A sudden spike in negative sentiment across Twitter, Reddit, and Discord can signal a dump before it hits the charts. Projects like Hivemind Analytics use AI to scan 500+ crypto communities daily, tracking sentiment trends and correlating them with on-chain activity. If a popular influencer’s post triggers a 40% surge in angry comments, the system alerts traders and DAO governance boards before the price drops.

Customer service in Web3 is also evolving. Decentralized autonomous organizations (DAOs) now use AI agents to handle support tickets. These agents don’t just answer FAQs; they detect when a member is frustrated, confused, or feeling excluded. If someone says, "I’ve been waiting 3 weeks and no one cares," the AI doesn’t just auto-reply with a link. It recognizes the emotional weight, flags the issue as critical, and escalates it to a human moderator within minutes. This reduces churn in community-driven projects by up to 35%.

Even in NFT marketplaces, sentiment analysis is helping creators. Platforms like Foundation now analyze comments on new drops. If a collection gets flooded with comments like "overpriced," "rip-off," or "this feels lazy," the system alerts the artist, not to delete the post, but to engage. Some artists now reply publicly with behind-the-scenes footage or price justification, turning negative sentiment into community trust.
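Here is a minimal sketch of the flag-and-escalate pattern described above, in Python. It assumes a ticket carries three hypothetical signals: the message text, the hours the member has waited, and typing speed (echoing the keystroke cue mentioned earlier). The cue phrases, weights, and 0.7 threshold are illustrative placeholders, not values from any real product. A production system would replace the phrase list with a trained classifier.

```python
from dataclasses import dataclass

# Illustrative cue phrases; a real system would use a trained emotion classifier.
FRUSTRATION_CUES = ("no one cares", "still waiting", "been waiting", "ridiculous")


@dataclass
class Ticket:
    text: str
    wait_hours: float          # time since the member first asked for help
    keystrokes_per_sec: float  # behavioural signal; use 0 when unavailable


def frustration_score(ticket: Ticket) -> float:
    """Combine weak signals into a score between 0 and 1 (weights are placeholders)."""
    score = 0.0
    if any(cue in ticket.text.lower() for cue in FRUSTRATION_CUES):
        score += 0.5
    if ticket.wait_hours >= 72:               # waited three days or more
        score += 0.3
    if 0 < ticket.keystrokes_per_sec < 1.5:   # slow, hesitant typing
        score += 0.2
    return min(score, 1.0)


def route(ticket: Ticket, threshold: float = 0.7) -> str:
    """Send high-frustration tickets to a human moderator instead of an auto-reply."""
    if frustration_score(ticket) >= threshold:
        return "escalate_to_human"
    return "auto_reply"
```

For the member quoted above, `route(Ticket("I've been waiting 3 weeks and no one cares", wait_hours=504, keystrokes_per_sec=1.0))` returns `"escalate_to_human"`. A routine question with a short wait gets an auto-reply. Keeping the score separate from the routing decision makes it straightforward to log both for later review.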

Challenges and Pitfalls

It’s not perfect. AI still struggles with cultural nuance. A joke in Indian English might read as hostile to a U.S.-trained model. Sarcasm remains a blind spot: "Oh great, another outage" can be misread as genuine praise. And bias? It’s still a problem. If training data comes mostly from English-speaking users in North America, the system won’t understand the emotional tone of a Spanish-speaking customer in Mexico or a Mandarin speaker in Guangdong.

There’s also the "black box" issue. If an AI denies a user’s support request based on sentiment, how do you know why? Blockchain helps here too. Some platforms now log AI decisions on-chain as transparent, auditable events. You can trace: "Sentiment score: -0.82 → Reason: High frustration keywords + low resolution history → Action: Escalated." That level of accountability is rare elsewhere.

And cost? Advanced multimodal systems still need teams of data scientists, engineers, and domain experts. Small crypto projects can’t afford $500,000 AI setups. But open-source tools like Hugging Face’s sentiment models, combined with decentralized compute networks (like Akash or Render), are bringing costs down. You can now run a basic sentiment analyzer on a $20/month cloud server.

What’s Next: 2026-2033

By 2030, AI sentiment analysis will be as common as email signatures. Here’s what’s coming:

- Emotion-aware AI agents will handle entire customer journeys, not just answering questions but sensing when you’re stressed and slowing down responses, or when you’re excited and offering bonus content.

- Blockchain-secured emotion wallets will let users store their emotional history: "I felt anxious during this support call on March 12, 2026," and choose which companies can access it.

- On-chain sentiment indexes will track community mood for tokens, NFTs, and DAOs like stock market indices-giving investors real-time emotional market signals.

- Edge AI + blockchain will let your smartwatch analyze your stress levels during a crypto trade and warn you: "Your heart rate spiked when you saw the price drop. Want to pause?"

How to Get Started

If you’re a crypto project, DAO, or Web3 startup:

- Start with text-only sentiment. Use free tools like Hugging Face’s transformers or Google’s Natural Language API on your Discord or Telegram logs.

- Track keywords: "refund," "scam," "slow," "help," "thanks." See where frustration clusters.

- Integrate with a decentralized storage layer like IPFS to keep raw data off centralized servers.

- Once you have 10,000+ interactions, explore multimodal options-voice tone analysis via Whisper, facial analysis via open-source CV models.

- Consider a blockchain-based sentiment protocol like SingularityNET for transparent, user-owned insights.
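The keyword-tracking step can start as a simple count per channel. This Python sketch assumes messages have already been exported as `(channel, text)` pairs. That format is hypothetical, since Discord and Telegram exports differ. The sketch matches whole words only, so "refunds" is not counted as "refund".

```python
import re
from collections import Counter, defaultdict

# Keywords from the checklist above; adjust for your own community.
TRACKED = {"refund", "scam", "slow", "help", "thanks"}


def keyword_counts(messages):
    """Count tracked keywords per channel.

    `messages` is an iterable of (channel, text) pairs. Matching is on whole,
    lower-cased words, so "refunds" is not counted as "refund".
    """
    counts = defaultdict(Counter)
    for channel, text in messages:
        for word in re.findall(r"[a-z']+", text.lower()):
            if word in TRACKED:
                counts[channel][word] += 1
    return counts
```

Sorting channels by `sum(counter.values())` shows where frustration clusters. Because "thanks" is tracked too, positive signals show up alongside the complaints.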

Final Thought

AI sentiment analysis isn’t about replacing humans. It’s about giving humans better information. In blockchain, where trust is built through transparency rather than logos or ads, understanding how people *feel* is the ultimate competitive edge. The future belongs to projects that don’t just track transactions, but truly listen.

Can AI sentiment analysis be trusted in crypto communities?

Yes, but with oversight. AI is great at spotting patterns, like a sudden spike in negative keywords across forums, but it can misread sarcasm, cultural phrases, or humor. The best approach combines AI alerts with human review. For example, if 200 people say "this is a scam," the AI flags it. A moderator then reads a sample of those posts to confirm context. This hybrid model cuts false alarms by up to 60%.
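The hybrid model in this answer can be sketched as follows. The code counts posts containing a phrase. Once the count crosses a threshold, it hands a random sample to a moderator instead of acting automatically. The function name, the 200-post threshold (taken from the example above), and the sample size of 20 are illustrative assumptions.

```python
import random


def flag_for_review(posts, phrase="scam", min_hits=200, sample_size=20, seed=None):
    """Return a random sample of posts mentioning `phrase` for a moderator to read.

    Returns an empty list while the number of matching posts is below `min_hits`,
    so the AI only raises an alert; a human confirms the context.
    """
    hits = [post for post in posts if phrase in post.lower()]
    if len(hits) < min_hits:
        return []
    rng = random.Random(seed)  # a fixed seed makes the sample reproducible
    return rng.sample(hits, min(sample_size, len(hits)))
```

`flag_for_review(posts, seed=42)` returns at most 20 matching posts, or an empty list while the threshold has not been reached. Passing a seed makes the sample reproducible if the review itself is later audited.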

How does blockchain improve sentiment data privacy?

Traditional platforms collect your chat logs, emails, and voice recordings on their servers. Blockchain lets you keep your raw data on your device. Only a hashed, anonymized summary (like "User expressed frustration 3 times this week, resolved in 2 days") is stored on-chain. Companies get insights without ever seeing your personal messages. This approach is designed to meet GDPR and emerging Web3 privacy standards.
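One way to read "only a hashed summary is stored on-chain" is as a commit-reveal scheme. The device aggregates raw events into counts, then publishes only a salted SHA-256 digest of that summary. This Python sketch is an assumption about how such a platform might work, not the design of any named project. The event schema is hypothetical, and submitting the digest to an actual chain is out of scope. The salt matters because a summary like "3 frustration events, 67% resolved" has so few possible values that an unsalted hash could be brute-forced.

```python
import hashlib
import json
import secrets


def summarize_locally(events):
    """Runs on the user's device: reduce raw sentiment events to aggregate counts.

    `events` is a list of dicts such as {"label": "frustration", "resolved": True}
    (a hypothetical schema). No message text leaves this function.
    """
    frustrated = [e for e in events if e["label"] == "frustration"]
    resolved = sum(1 for e in frustrated if e["resolved"])
    rate = round(resolved / len(frustrated), 2) if frustrated else None
    return {"frustration_events": len(frustrated), "resolution_rate": rate}


def new_salt() -> bytes:
    """Random secret kept on the device; it stops anyone guessing the summary from its digest."""
    return secrets.token_bytes(16)


def commitment(summary, salt: bytes) -> str:
    """Salted SHA-256 digest of the summary in canonical JSON form.

    Only this hex string would be published on-chain. Revealing `summary` and
    `salt` later lets anyone recompute it and confirm the summary is unaltered.
    """
    payload = json.dumps(summary, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(salt + payload).hexdigest()
```

To share insights, the user reveals `summary` and `salt` to a company. The company recomputes `commitment(summary, salt)` and checks the result against the on-chain digest.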

Is multimodal sentiment analysis worth the cost for small crypto projects?

Not yet. Multimodal systems (voice + face + text) require expensive hardware and expertise. For small teams, stick with text analysis from Discord, Twitter, and Reddit. Use free APIs. Once you have 50,000+ interactions and a growing support team, then consider adding voice tone analysis. The ROI isn’t there until scale.

Can sentiment analysis predict crypto price movements?

Not directly, but it can signal risk. A 30% surge in negative sentiment across major crypto communities often precedes a price drop by 12-48 hours. It’s not a crystal ball, but it’s a leading indicator. Projects like Hivemind Analytics correlate sentiment spikes with on-chain sell-offs, giving traders early warnings. Still, always combine it with volume data and news events.
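Here is a rough sketch of the "30% surge" check in Python. It assumes "surge" means a relative rise in the share of negative-labelled messages between a baseline window and the current window. The answer does not define the term, and a rise in raw message volume would be another valid reading. Labels are assumed to come from an upstream classifier.

```python
def negative_share(labels):
    """Fraction of messages in one time window labelled "negative"."""
    if not labels:
        return 0.0
    return sum(1 for label in labels if label == "negative") / len(labels)


def sentiment_surge(baseline, current, rise=0.30):
    """True when the negative share in `current` is at least `rise` (30% by default)
    above the share in `baseline`. A risk signal, not a price prediction."""
    before = negative_share(baseline)
    now = negative_share(current)
    if before == 0:
        return now > 0  # any negativity after a fully non-negative baseline counts
    return (now - before) / before >= rise
```

Suppose the baseline has 2 negative messages out of 10 (20%) and the current window has 3 out of 10 (30%). That is a 50% relative rise, so `sentiment_surge` returns `True`. As the answer says, treat it as one input alongside volume data and news.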

What’s the easiest way to start using AI sentiment analysis?

Export your Discord or Telegram chat logs (last 30 days) and upload them to Hugging Face’s free sentiment analyzer. It’ll label each message as positive, negative, or neutral. Look for clusters of negative sentiment around specific topics-like "withdrawal delays" or "token burn confusion." Fix those issues first. You’ll see immediate improvements in community trust.
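Once each message has a label, finding negative clusters is mostly a grouping exercise. The label could be, for example, the `label` field that a Hugging Face `transformers` sentiment pipeline returns, lower-cased. Label names vary by model, and some models have no neutral class. In this Python sketch the topic names come from the answer above, but the keyword lists under each topic are illustrative assumptions.

```python
import re
from collections import Counter

# Topic names echo the examples above; the keyword lists are illustrative.
TOPICS = {
    "withdrawal delays": {"withdraw", "withdrawal", "withdrawals", "pending"},
    "token burn confusion": {"burn", "burned", "burnt", "supply"},
}


def negative_hotspots(labelled):
    """Rank topics by how many negative messages mention them.

    `labelled` is an iterable of (text, label) pairs with lower-case labels.
    A message can count towards more than one topic.
    """
    counts = Counter()
    for text, label in labelled:
        if label != "negative":
            continue
        words = set(re.findall(r"[a-z']+", text.lower()))
        for topic, keywords in TOPICS.items():
            if words & keywords:
                counts[topic] += 1
    # Highest count first; ties broken alphabetically for stable output.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))
```

The top entry in the returned list is the issue to fix first.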

18 Responses

It's wild how we're turning human emotion into data points you can chart on a dashboard. We used to talk about empathy in customer service; now it's just a score between -1 and 1. I'm not saying it's bad, but it feels like we're outsourcing our humanity to algorithms that don't even understand why we cry at dog videos.

And yet… it works. I’ve seen companies turn around entire communities just by responding to the quiet ones, the ones typing "I'm okay" with slow keystrokes. That’s not magic. That’s attention.

Blockchain just makes it ethical. No more shady data brokers selling your rage as a commodity. You own your frustration now. That’s revolutionary.

Still, I worry we’re building a world where machines listen better than our partners do. Maybe that’s the real test: when AI detects your silent anger before you do, what do you do with it?

Do you fix it? Or do you just mute the notification and keep scrolling?

I love that this is happening but I’m also terrified. What happens when the AI starts telling people they’re being too emotional? Like, what if your sigh in a voice call gets flagged as ‘low engagement potential’ and you get downgraded in the system? We’re not widgets. We’re messy, contradictory, tired humans. I just hope someone’s building in room for that.

Oh wow, so now my passive-aggressive "cool story bro" is going to be analyzed for latent hostility? Brilliant. Next they’ll read my tone when I say "sure, whatever" to my roommate and send a DAO vote to evict me.

But honestly? I’m here for it. If this means my support tickets get answered before I start drafting my Yelp review, count me in. Just don’t let the AI start giving me life advice. I don’t need a bot telling me I’m "emotionally avoidant" because I didn’t reply to a DM for 48 hours.

Can we just pause for a second and appreciate how far we’ve come? Five years ago, we were still arguing whether chatbots should use exclamation points. Now we’re building systems that detect sarcasm in crypto Twitter and correlate it with on-chain sell-offs.

And it’s not even about the tech; it’s about the shift. We’re finally treating emotion as data worth respecting, not just mining.

I’ve worked in support for a decade. I’ve seen people cry on calls, rage in DMs, and whisper "I just want to be heard" in a thread no one reads. This? This is the first time I’ve seen tech that actually listens.

Yes, it’s imperfect. Yes, bias exists. But if we keep iterating, keep auditing, keep giving users control… this could be the most human thing the tech industry has ever built.

Don’t underestimate the power of being seen. Even by a machine.

I’m skeptical. The idea of owning your emotional data sounds noble, but most people won’t understand what that means. They’ll click "agree" without reading. And companies will still find ways to monetize the summaries. Blockchain doesn’t fix bad incentives. It just makes them more opaque.

Also, "emotion wallets"? That’s not a feature. That’s a marketing buzzword with a side of dystopia.

Start with Hugging Face. Free. Easy. Do it.

I’ve been thinking about this a lot since I saw my own sentiment score drop after a 3-hour support call last month. The AI flagged me as "high churn risk" because I said "I’m fine" three times in a row with long pauses between each. I wasn’t fine. I was exhausted. I didn’t want to fight anymore.

But here’s the thing-the system didn’t just flag me. It triggered a personal note from a human rep who said, "We noticed you’ve been patient through a lot. We’re sorry you’ve had to wait. Here’s a refund and a free upgrade. No questions asked."

That’s the magic. Not the AI. Not the blockchain. The moment the machine recognized my silence and handed me to someone who could hear it.

That’s the future. Not the algorithms. The humans who get to act on them.

I don’t know if this will scale. But I do know that when tech makes you feel less alone, it’s worth fighting for. Even if it’s imperfect. Even if it’s noisy. Even if it’s still learning how to understand us.

And honestly? I think we’re all just trying to be heard. Even the ones typing "I’m okay." I just hope we don’t forget that.

Bro this is the future and I’m here for it 😍

Imagine your smartwatch buzzing during a crypto dump like "Hey, your heart’s racing. Want me to pause your trade?" That’s not sci-fi. That’s Tuesday.

And the blockchain part? Genius. My emotional data is mine. Not some VC’s spreadsheet. I’m not selling my frustration for ad revenue.

Also, if AI can detect when I’m being sarcastic on Reddit, maybe it can finally tell my mom I’m not actually mad when I say "yeah sure mom"

we’re living in the future and I didn’t even buy a ticket

lol this is so stupid. You think AI can read sarcasm? Last week I said "oh great another airdrop" and the bot flagged it as "positive sentiment" and sent me a thank you email. I’m not even mad. I’m impressed. This is what happens when you let engineers build emotional intelligence with a dataset of 1000 tweets from 2017.

And blockchain? Please. You think some hashed summary is going to stop a company from re-identifying you from your typing speed and word choice? That’s not privacy. That’s theater.

Also, who gave this guy a mic? This reads like a TED Talk written by a startup that just got funded.

Wake up. We’re not building a better world. We’re just making the same old exploitation look prettier.

Why does everything have to be American tech? In Nigeria, we know emotion. We don’t need AI to tell us when someone is angry. We read the tone. We read the silence. We read the proverbs.

You think your blockchain can capture the weight of "I no dey cry, but I dey die inside" ("I'm not crying, but I'm dying inside")? You think your model trained on Twitter can understand a Yoruba grandmother saying "I’m fine" after her son lost his job?

This isn’t innovation. It’s cultural arrogance wrapped in code.

The ethical implications of this technology are profound and require careful, multidimensional consideration. While the potential for enhancing customer experience through emotionally intelligent systems is undeniable, we must also acknowledge the risks of algorithmic overreach, the commodification of affective states, and the potential erosion of human agency in interpersonal communication.

Furthermore, the integration of blockchain for data sovereignty introduces a compelling framework for accountability, yet it is not without its own challenges, particularly regarding scalability, energy consumption, and the potential for centralized governance structures to emerge within ostensibly decentralized ecosystems.

It is imperative that we do not conflate technological capability with moral imperative. Just because we can analyze emotion at scale does not mean we should. The question is not whether this system works, but whether it aligns with our values as a society.

And if we are to proceed, we must ensure that the voices of marginalized communities are not only included in the training data, but that they are actively shaping the design, governance, and ethical boundaries of these systems.

Otherwise, we risk building a future that listens, but never truly hears.

Just use Hugging Face. Free. Works. Done.

This is all fake. AI can't feel. Blockchain is just a database. People are dumb. Stop wasting time. Just fix the damn website.

Oh, so now we’re giving AI the power to interpret our emotions and then deciding who gets help based on some algorithm’s mood reading? Brilliant. Let’s just automate discrimination while we’re at it.

What happens when the AI decides your "neutral" tone is actually "low value customer" and denies your refund because your last 3 messages didn’t have enough exclamation points?

And don’t even get me started on "emotion wallets"-that’s not empowerment. That’s a new form of surveillance capitalism with a pretty UI.

Also, who approved this as a serious article? It reads like a pitch deck from a startup that just watched too many Black Mirror episodes.

Wow. Just… wow. You really believe this stuff? AI reading sarcasm? Blockchain securing emotion? Please. The only thing being secured here is your investor’s exit strategy.

Let me guess-you also think NFTs are art and DAOs are democracy. This isn’t innovation. It’s vaporware dressed up as enlightenment.

And you want me to trust a machine that can’t even tell the difference between "I’m fine" and "I’m fine"? I’ve seen bots reply to grief with a thank-you note. That’s not intelligence. That’s incompetence.

Also, "emotion wallets"? That’s not a feature. That’s a nightmare. Next thing you know, your insurance company will deny you coverage because your sentiment score was too negative last month.

Wake up. This isn’t the future. It’s the same old corporate greed with a blockchain logo slapped on it.

As someone who’s worked in customer experience across 5 continents, I’ve seen how emotion is expressed differently everywhere. In Japan, silence is a complaint. In Brazil, volume is vulnerability. In Nigeria, proverbs carry the weight of paragraphs.

Most AI models are trained on English tweets from LA. That’s not data. That’s bias with a PhD.

But here’s the good news: open-source tools are leveling the field. You don’t need a $500k budget. You need curiosity. You need to listen, not just to the data, but to the people behind it.

Start small. Ask your users: "What do you wish someone understood about how you feel?" Then build from there.

Technology doesn’t need to be perfect to be powerful. It just needs to be human.

I keep thinking about what it means to be understood. Not by a person, but by a system that doesn’t have a face, doesn’t have a heart, but still knows when you’re lying when you say you’re okay.

Is that comfort? Or is it invasion?

There’s something beautiful in the idea that your frustration could be seen, validated, and acted upon, even if no human ever looked at your message. But there’s also something terrifying in knowing that your silence, your pauses, your sighs, are all being measured, cataloged, and used to decide your worth.

Maybe the real question isn’t whether AI can read emotion.

It’s whether we still know how to feel without being analyzed.

And if we don’t… who will teach us?

Just read what Nicole wrote. That’s the heart of it. The tech is just a tool. The humanity is the point.

And if we lose that, we’ve already lost.